CONTROL: protect and empower, the real frontier of our relationship with AI

25 February 2025

Who, or perhaps what, should guide or govern AI's role in content creation and dissemination? How do we tap into the immense benefits on offer while safeguarding what we value and hold true? Our global survey of consumers reveals that attitudes are moving towards a more mature understanding of control: away from demands for restriction and towards more sophisticated approaches to oversight.

How do consumers perceive what may be valuable, and how can industries, governments and society strike the right balance?

AI control matters to people

Consumer demand for control and regulation of AI is clear in the survey data, with sentiment seemingly pointing towards a balanced set of actions by authorities and the AI industry itself.

Throughout the history of human innovation, there have been calls to control the proliferation of new technology. AI is no different, as the wider press and societal conversation make apparent and as our survey results corroborate. 60% of respondents see a need for regulatory oversight from government authorities, and 26% are concerned that AI could even go so far as to pose an ‘existential threat’ to humanity.

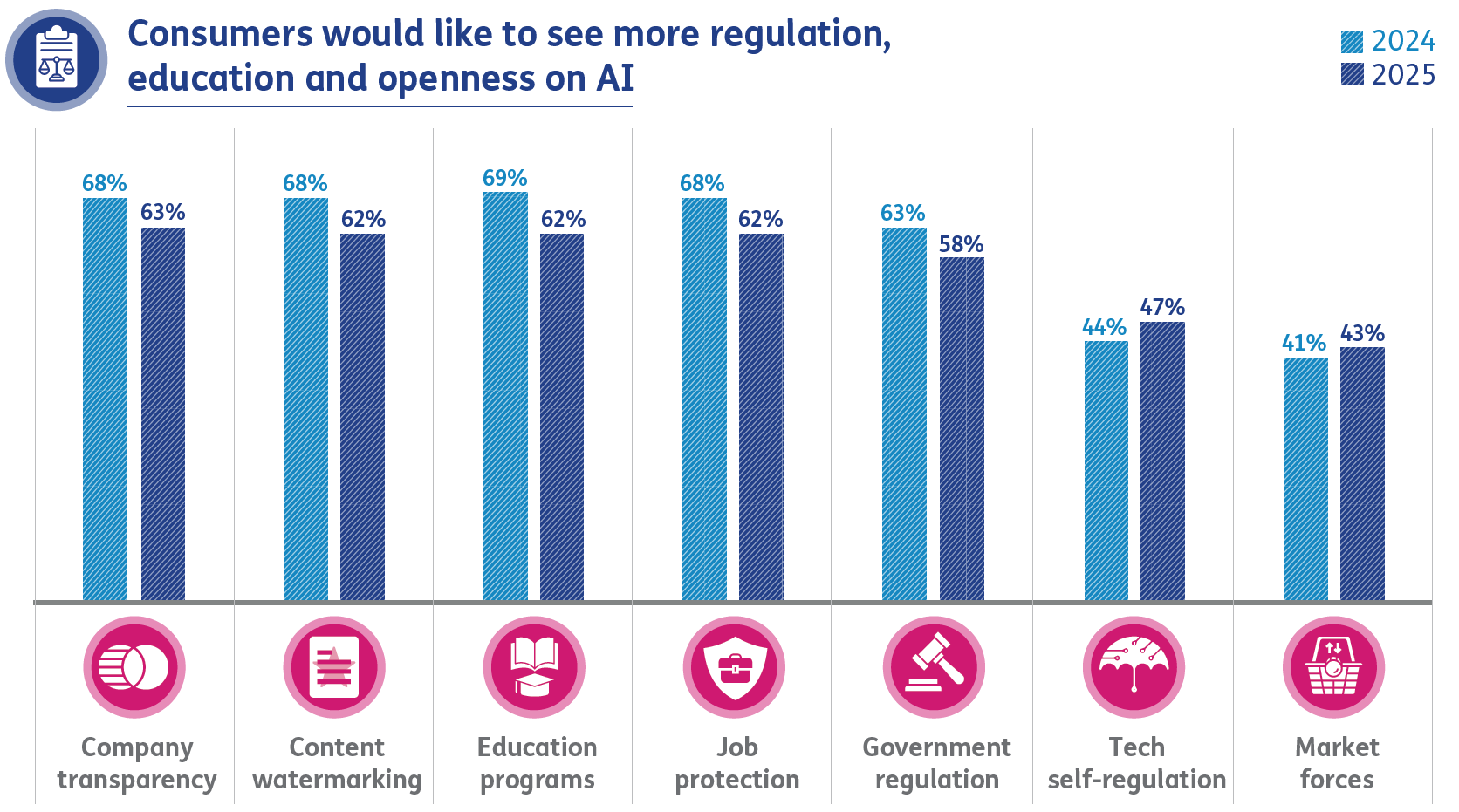

Perspectives vary with familiarity, but the direction and strength of consumer feeling are consistent. Those least familiar with AI tools are significantly more likely to demand regulation (62%, albeit down from 68% in 2024) than those who use AI regularly, although even this group leans towards regulation (53%, down from 56% in 2024).

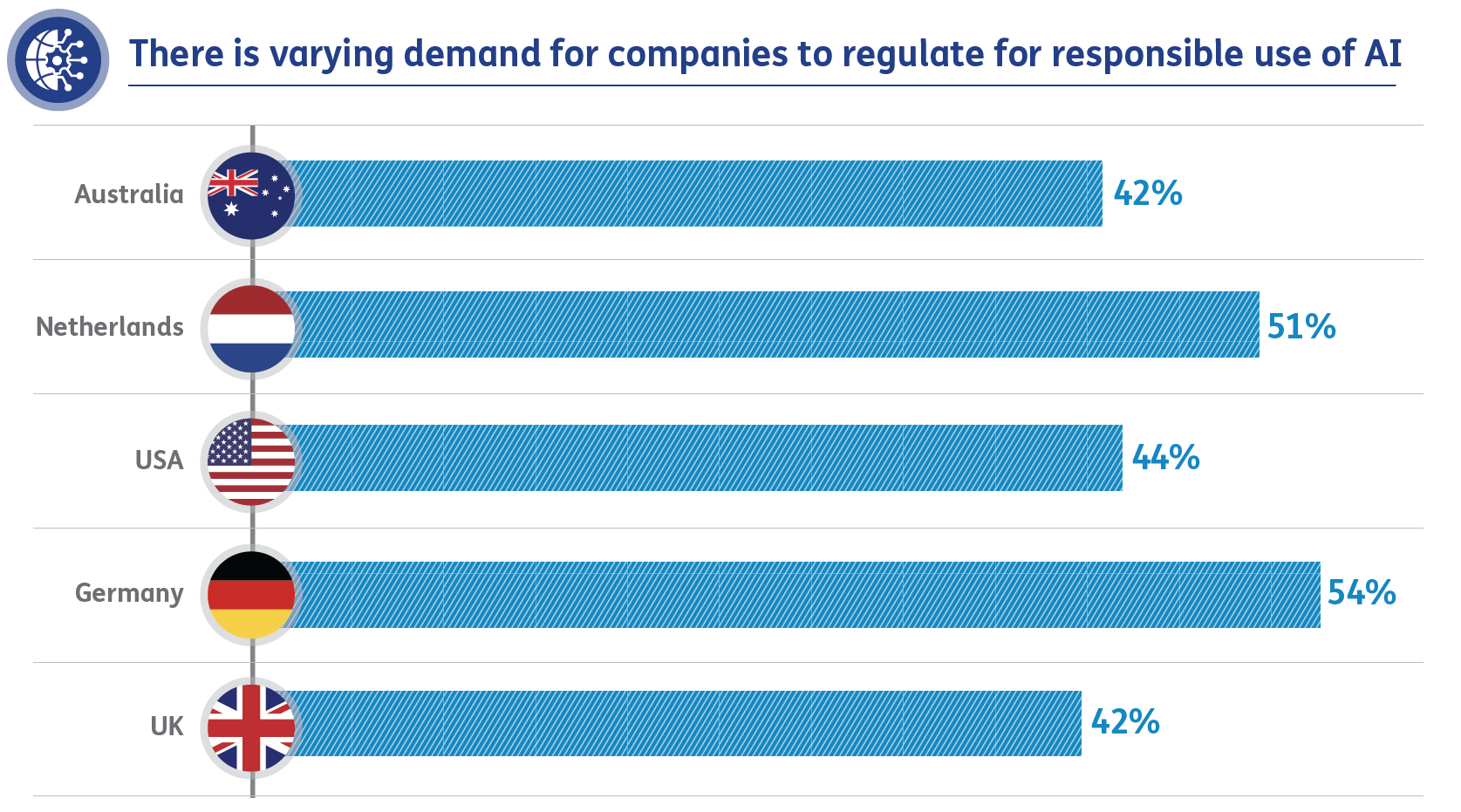

Opinions also vary by country. For example, 54% of respondents in Germany want technology companies to regulate their own use of AI responsibly, compared with just 42% in the UK. 49% of Australian and 42% of British respondents are concerned about AI replacing human jobs, while German and Dutch respondents (34% and 31% respectively) appear more accepting of AI’s role in creative processes.

Interestingly, respondents in the English-speaking countries (the US, the UK and Australia) are markedly more concerned than those in Germany or the Netherlands about AI passing as ‘real’, misleading people or displacing jobs. It is also notable that, in every country and across most categories, concerns about AI eased between 2024 and 2025.

On such limited data it is impossible to be definitive, but perhaps these patterns reflect the different historical and cultural contexts in which new technology arrives. Europe tends to prefer balanced oversight, combining innovation with strong institutional frameworks. The US has historically thrived where innovation enjoys wider degrees of freedom, away from the perceived stifling effect of outside interests, bureaucracy or regulation.

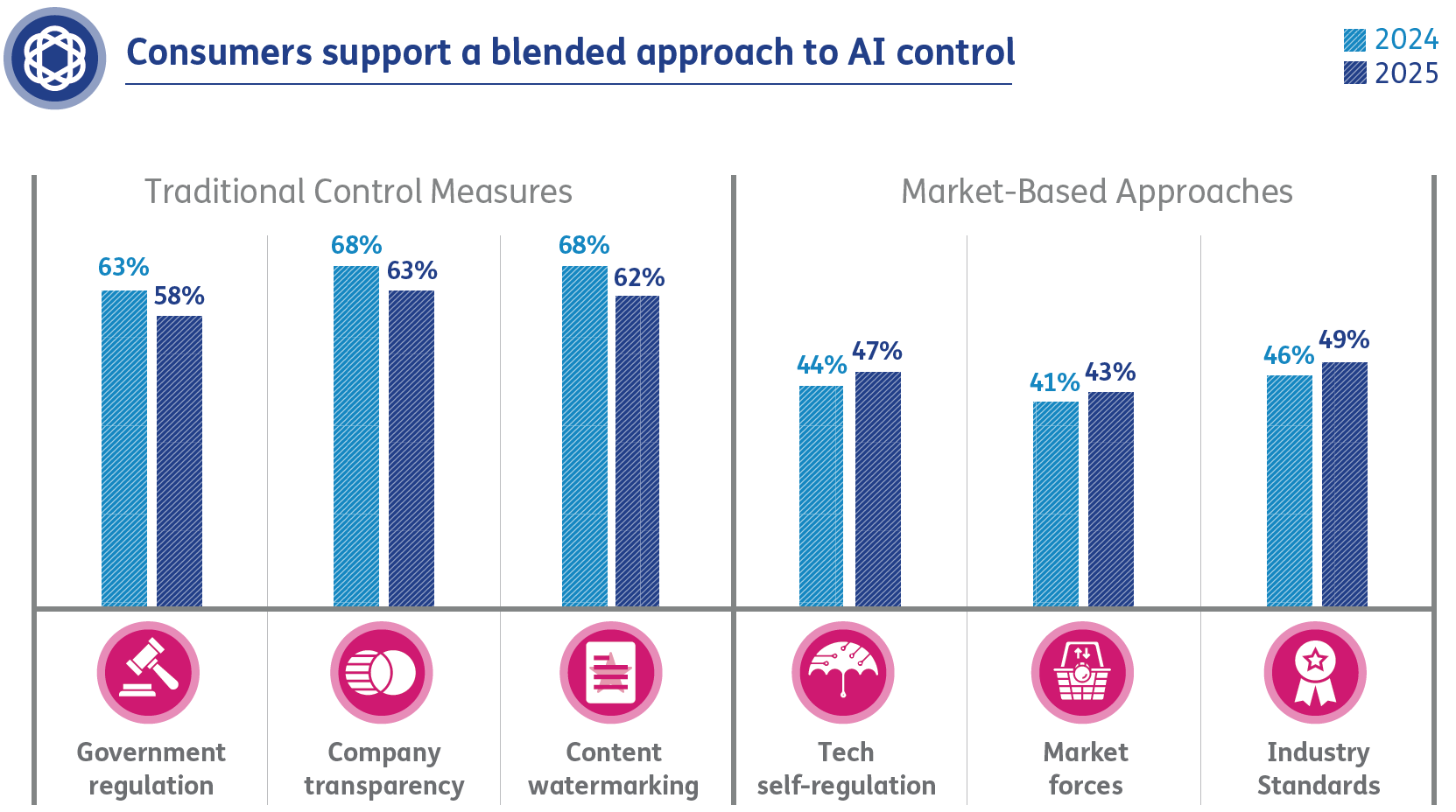

In terms of attitudes to AI control, our survey data reveal a stronger call for traditional, compliance-based measures, but also a shift away from these towards more market-based, industry-led and self-regulating mechanisms. Perhaps this reflects a search for balance and coherence between regulation and the standards that industries take responsibility for, with market forces aligning controls with consumers’ interest in seeing those controls work.

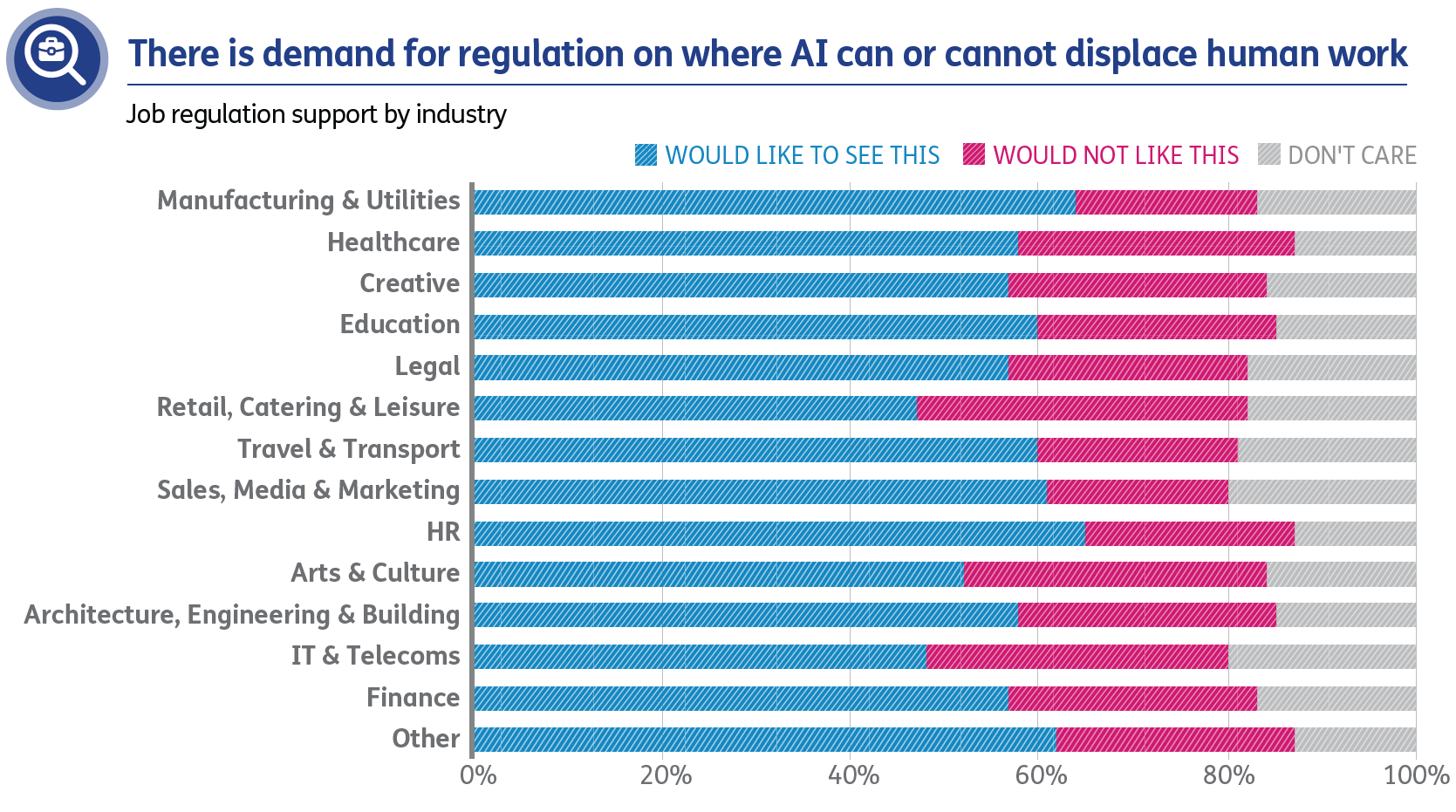

Responses on AI’s impact on jobs differ significantly by age group. When asked what they would like regulation to address, 16-24-year-olds ranked job protection as the most important factor, at 49%. However, this age group was also the least likely to see regulation as the government’s role (42%, compared with a high of 70% for those aged 55).

When asked whether they would like to see regulation of AI’s propensity to displace human work, a significant 50-60% of respondents were in favour. Results vary by the industry in which respondents work. Sectors dealing in physical products or facilities appear to show the highest demand for regulation, and it is perhaps understandable that those in the Legal or HR sectors are the most opposed to this form of regulation. Dare we suggest that the burden of such regulation would fall on these respondents?

Overall, the demand for control and regulation speaks loudly and clearly in the survey data, even if the picture is not uniform and no one-size-fits-all approach emerges. The sentiment towards balanced, collective action from governments and companies is reflected in some of what we see in the market, even if it is not yet as joined-up as it could be. For example:

- Frameworks like the European Union’s AI Act provide a roadmap for responsible AI governance. By categorising AI applications by risk level and setting stricter standards for high-risk uses, such as hiring or law enforcement, the Act fosters transparency and accountability.

- AI technology companies like Anthropic are also leaning in proactively to help shape how AI is responsibly controlled. Anthropic’s Responsible Scaling Policy (RSP) calls for safeguards such as rigorous testing and monitoring of advanced AI systems before deployment. Anthropic’s push for stronger US regulation reflects a growing industry awareness of the need for proactive governance to mitigate downstream consumer and commercial risks.

Education truly empowers us as consumers and society, and is a big missing piece of the current puzzle

Education is one of the factors that can be used to address gaps in AI understanding.

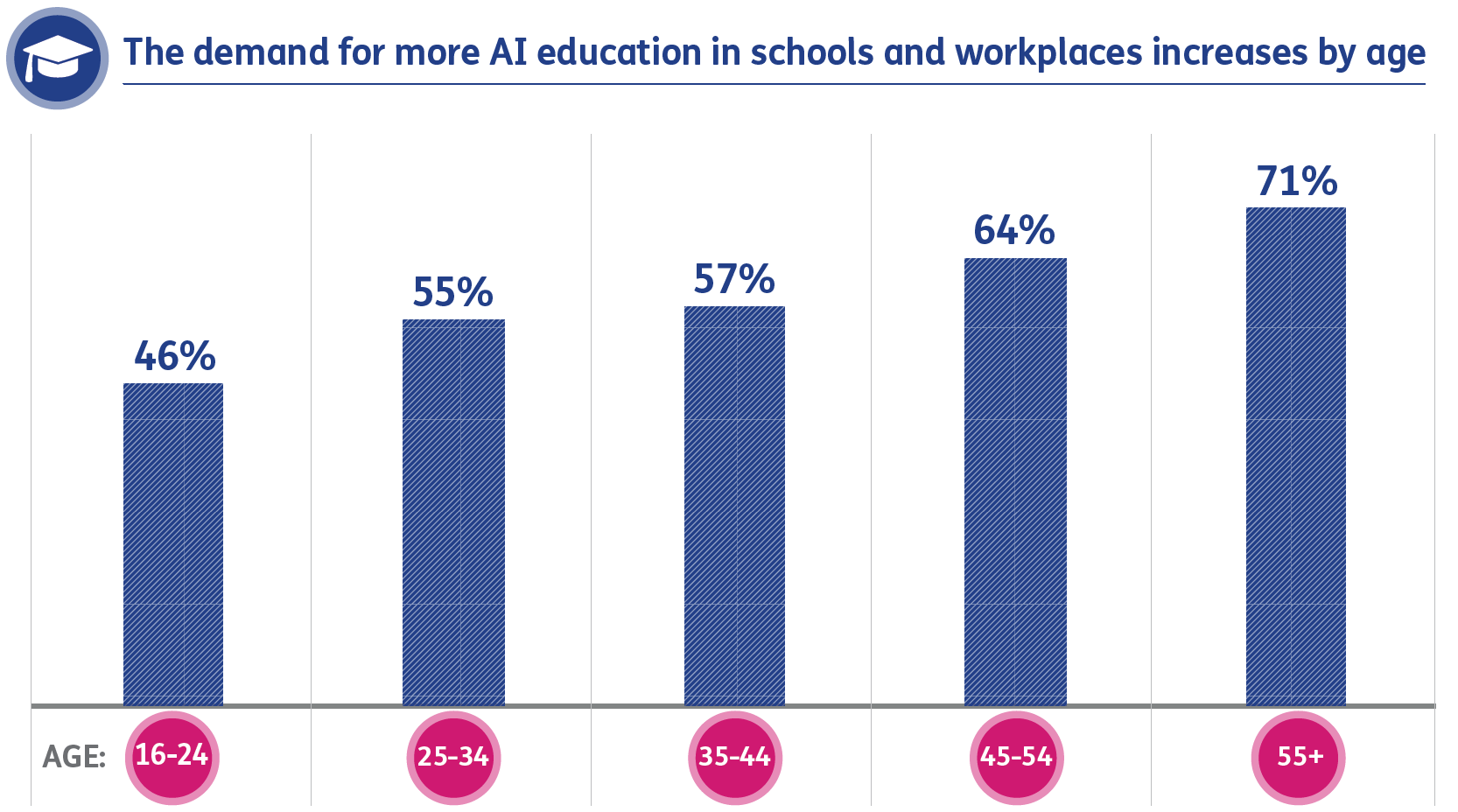

In the survey, almost two-thirds of all respondents would like to see more education on AI in schools and workplaces, including on what it is used for. This demand rises with age, but is still high among 16-24-year-olds at 46% (with a significant share of the remainder expressing no preference either way).

Education is a particularly interesting dimension of our survey, and one that may warrant deeper exploration in future. Throughout the results, respondents express concerns about things they may not yet have experienced, distrust the technology itself, or are too unsure even to express a preference.

For example, 34% of respondents expressed a concern that AI is ‘unknowable’, i.e. that we do not know the reasons behind a particular decision, and that this not-knowing is worrying. Once again, the opacity of AI as a technology presents a challenge for trust and adoption.

Addressing awareness and knowledge gaps through meaningful education can empower people to engage more confidently with AI: with what it is and isn’t, but also with what we want it for and what we do not. Without the right education, not just for schools or technologists but for wider society and decision-makers, how can we even know the questions in front of us, let alone the paths we may then choose?

Conclusion: collective action and education empower AI to reach its full potential

Taken together, the survey results reflect a growing maturity in our relationship with AI’s use in content:

- An overall demand for regulation and control of AI technology

- A more nuanced picture beneath this, shaped by people’s familiarity with AI, their age, cultural practices and geography

- An emerging sentiment in favour of a balanced suite of interventions, combining action from governments or authorities with self-regulation and market forces

- A recognition and demand for greater education on AI, both explicitly in workplaces and schools but also tacitly through our observations of wider survey findings

High support for AI education and transparency suggests that, for consumers, control isn't just about restriction: it's about empowerment. Forcing carmakers to build cars with seat belts was a start. Helping drivers and passengers truly understand why this matters, and empowering them to actively choose to ‘clunk-click every trip’, changed behaviour for generations. What followed, from airbags to bicycle helmets to safe sex, from nicotine patches to the use of PPE, all flowed from the same insight. Understanding empowers our self-interest, to the benefit of consumers and societies.

The path forward on AI is not straightforward, partly because the technology has developed so quickly, and in ways that can be hard to convey to those who are not AI natives, and partly because there can be a commercial self-interest in not lifting the veil on practices or usage.

Our survey respondents may point to a future in which AI control lies not in choosing between regulation and freedom, but in more informed and balanced forms of self-interest, with both governments and the AI industry aligned behind us, the human beings AI is meant to serve.

For creators and companies, perhaps the key questions ahead are:

- How are you engaging with the debate on AI control and regulation?

- What concerns do your own customers or audiences have, and are you doing your bit, as a participant and as an industry? To what end?

- Is educating your customers (and perhaps your employees, or society more widely) one of your biggest opportunities? If you aren’t doing it, will someone else, and where does that leave you?
