TRUST: transparency earns trust, and right now there isn’t enough of either

6 min read 25 February 2025

Generative AI is reshaping not only how we produce and perceive content, but also the content itself - from text and images to fully published works and reports; from music and voice emulation to fully AI-generated personas and personalities; from user-generated content to auto-generated, real-time, algorithm-adaptive content. What does it now mean to place our trust in what we see, hear and experience?

Most people want greater transparency on AI’s use

When it comes to AI-generated content, one thing is clear: more than two in three consumers want to know when AI is being used.

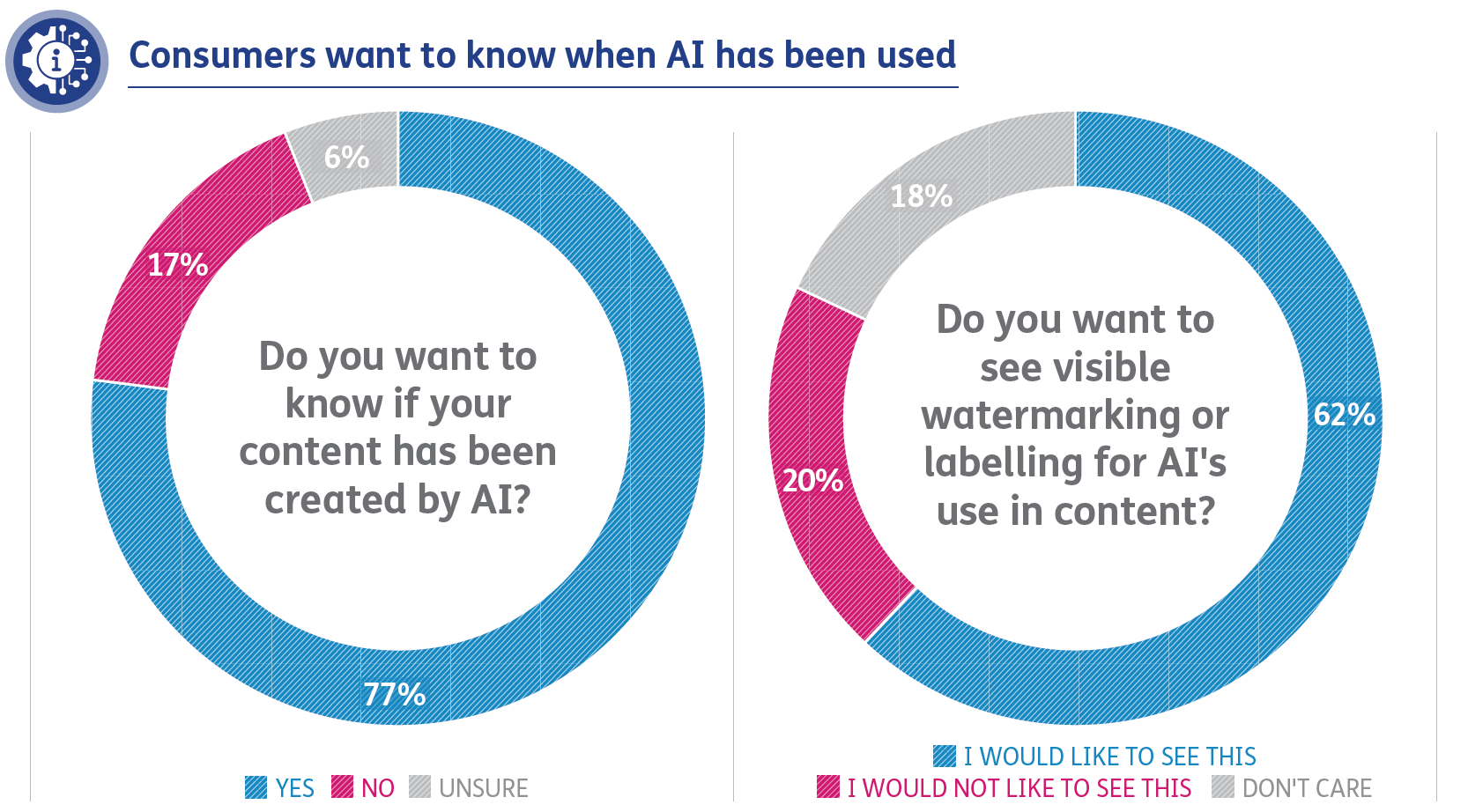

In Baringa’s survey, 77% of respondents would want to know if the content they consume had been created by AI, either in whole or in part. Only 12% would not care and 5% would prefer not to know (with 6% unsure).

31% of respondents go even further: they would not be happy to consume content at all without knowing whether it had been generated by AI, and a further 41% would have reservations about doing so. Only one in four would be happy to consume content without knowing whether AI played a part.

Interestingly, the demographic data reveals that this is not a simple case of young versus old. The 16-24 year old group is the most likely to use AI, but does so with reservations, and is less likely to be happy not knowing whether AI is used than the 25-44 year old groups.

Transparency seems to matter. Given that 86% of respondents believe AI will play some role in most content creation, now or in the future, this desire for transparency becomes a potentially significant issue. 63% of respondents would like to see creative companies being open about the AI they use. 62% support using visible watermarks or labelling to clearly indicate whether, or how much, AI has been used in content: roughly three times the share of respondents who would not want this (20% have no preference either way).

This push for transparency isn't about labels; it’s about trust. Audiences feel entitled to understand the origins of the media they consume. It's not about rejecting AI outright, but about being told when AI is part of the equation. These findings underscore a broader anxiety about misinformation and about what can be trusted.

For factual, non-fiction content (such as journalism, or reference content such as textbooks and biographies), 48% of respondents would not trust AI to be used in creating that content (either not at all or not very much), compared with only 12% who would fully trust it. This tends to be more pronounced among female respondents than male, and the trend holds across geographies, with US respondents tending to be more polarised in their strength of feeling than those in other geographies surveyed.

For creative content, such as music, films and fictional books, 32% of respondents would be uncomfortable with content wholly created by AI and would choose not to consume it. A further 38% would still consume it, but with discomfort and reservations.

Without it, people can’t actually spot the difference

In a simple A or B test, we see that most people can’t distinguish between human and AI-generated text or imagery. In fact, their performance is poorer than a coin toss.

One of the most interesting results from our survey was that the majority of respondents were not good at distinguishing between human-generated and AI-generated content. We won’t claim statistical validity, but when asked to identify the originator of still images and of text that was (or purported to be) Shakespeare’s own, respondents were correct less than 40% of the time. This was the same across all age groups. Guessing at random, or tossing a coin, would have been more accurate.

The results of our light-hearted human versus AI identification tests do demonstrate the rapid advance and sophistication of generative AI tools available today - not just available, but freely and ubiquitously available. If this is what we can all do, then what can an advanced technology company or state do?

If generative AI is good enough to be convincing as original or human, then does it matter where the content came from? Is the idea of a human essence or value just an illusion or an anachronism? After all, you like what you like. That is surely the essence of the creative world?

But while this technological mimicry is undoubtedly impressive, it raises significant concerns. How can consumers make informed decisions if they cannot tell who or what is shaping the content they see? Is the photograph, news item or point of view adapting itself to me, before my very eyes? Do I see what others see? Like Schrödinger’s cat, is the content made to exist in one form, or even changed, by the mere fact that I am looking at it?

The prospect of misinformation and manipulation, intended or otherwise, is at the heart of consumers’ trust apprehensions.

Survey respondents expressed concern over the potential for AI to deceive audiences, particularly when it comes to sensitive or political content. ‘Misuse’ was ranked as the number one concern about AI-generated content (46% of respondents), with ‘misinformation’ a close second (42%); these two topped the list of consumers’ concerns in both the 2024 and 2025 surveys.

Interestingly, 43% of participants indicated that they were ‘very’ or ‘fairly’ confident that they could accurately identify AI-generated images, an 8% increase in confidence from the 2024 survey. Yet despite this confidence, the outcome in both surveys was the same: accuracy was worse than a coin toss (31% correct in 2025, compared with 24% in 2024). The ‘very confident’ cohort fared better than average, but still worse than a coin toss: only 42% accurate in 2025 and 40% in 2024.

Without being told where AI plays a part in content, people struggle to discern it for themselves. As we have already explored, consumers place value on human truth and quality; the risk is that, unable to tell the difference, they take content at face value. This can be especially problematic where authenticity matters.

Of course, human-created content is not guaranteed to be accurate, factual or free from bias and manipulation. Among respondents’ concerns about human-generated content, the potential for human error (33%) and for bias and prejudice (32%) rank as the top two, albeit both at lower percentages than in 2024.

Trusting AI matters, for all and for the future

Most people agree that AI can play a major role in our future, but many believe more must be done for AI to earn our trust.

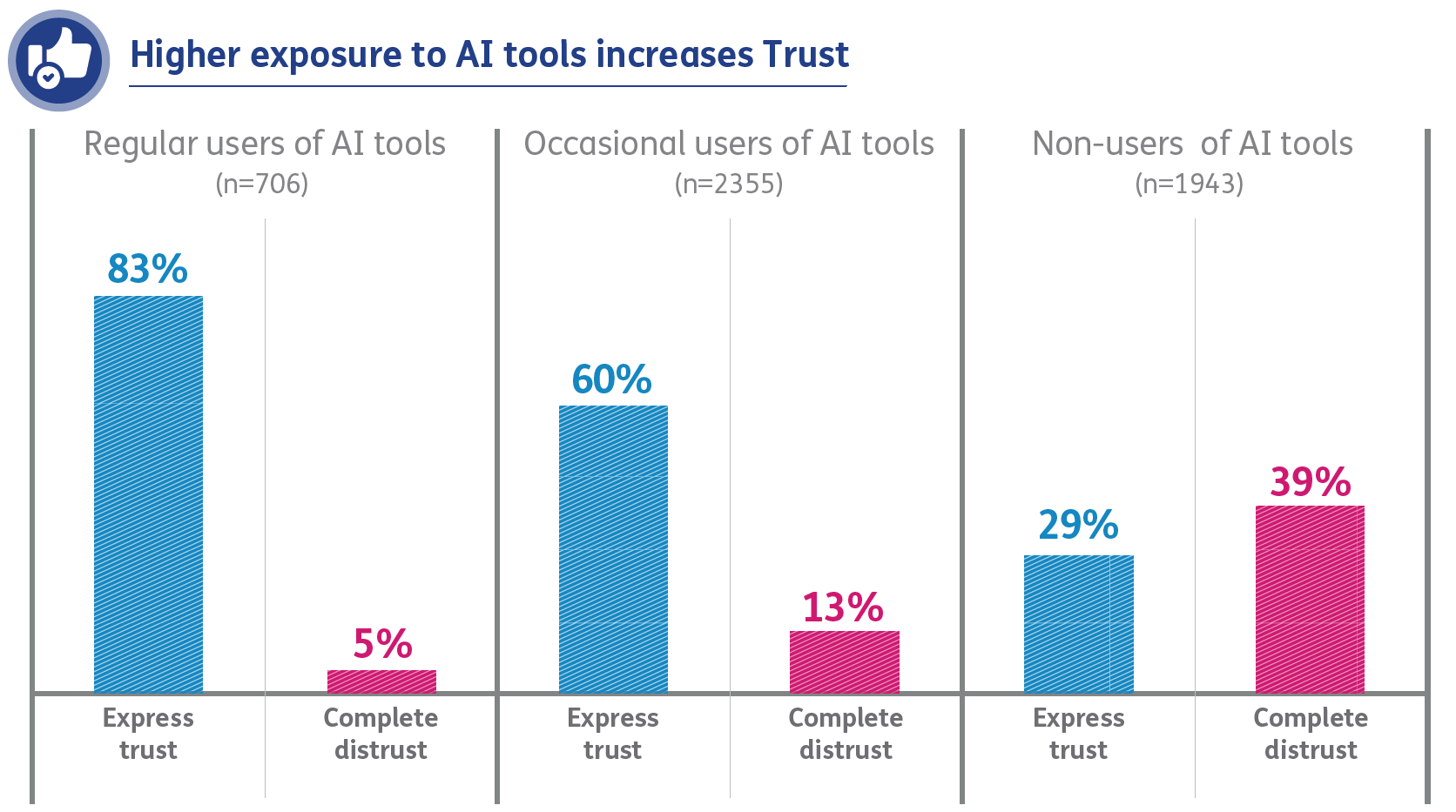

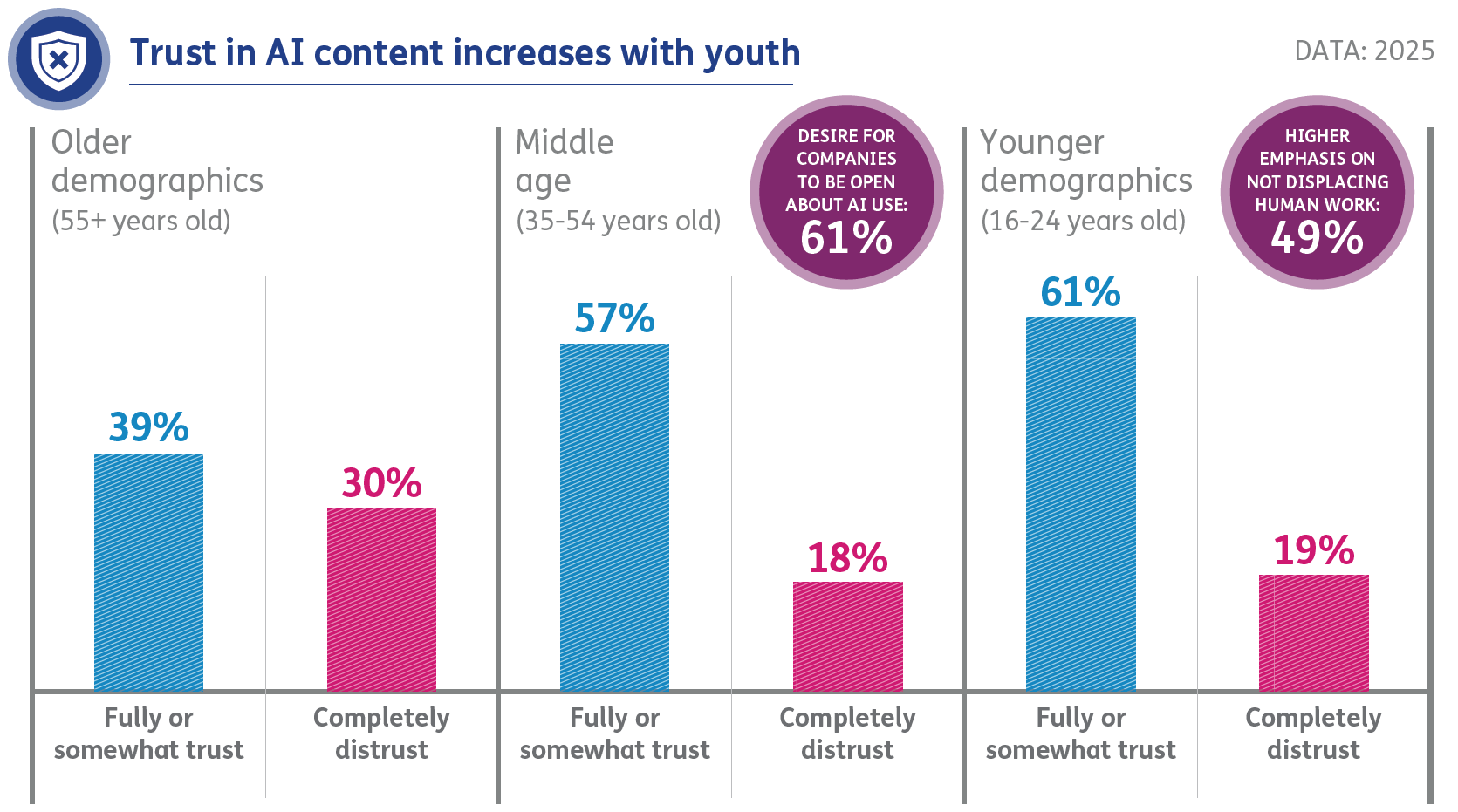

The survey results indicate that levels of trust correlate well with levels of AI familiarity and experience, and that levels of distrust correlate with the respondent’s age.

These differences tell us something about how trust forms through experience, but also that society may become polarised between those who use AI and build their own positive experiences with it, and those who don't and may actively choose to avoid it.

The data indicates that even occasional exposure to AI tools significantly affects trust levels, although whether this perceived use and familiarity translates directly into what consumers trust is debatable. Does occasionally asking ChatGPT questions, or asking AI for music recommendations, mean you now trust AI to shape the content you get on your smartphone, or that you are happy the next pop star you really engage with doesn’t exist in real life?

We might infer that older generations grew up in a world where informational content sources were fewer but more verified, and where the path from creator to consumer was clearer. Their higher levels of distrust may stem not from resistance to technology per se, but from ingrained expectations about content authenticity and verification. Older groups also show the highest propensity to favour labelling AI content, at 72%.

Younger generations have grown up navigating a digital landscape where source verification is a constant backdrop to what we see and hear. Perhaps their higher trust levels reflect not naivety or a gung-ho faith in technology, but a more sophisticated understanding of how to evaluate content in a mixed human/AI environment. They may filter and judge content on its merits rather than its origin, having developed better strategies for evaluating and cross-referencing information, regardless of source.

Conclusion: trust is essential for AI to improve our world, and that means a push on transparency and information

AI holds immense potential to transform our world for the better. But for this future to be realised, AI needs to earn our trust.

Given that our own ability to determine if AI is behind our content is less accurate than tossing a coin, what does a responsible approach to trust in AI look like?

For our respondents, it starts with transparency: companies being open about when and how they are using AI, with an ethics-based approach and guidelines for usage, especially for content that could manipulate or mislead.

It also requires education in, and experience with, the technologies themselves, not just to promote healthy adoption and better strategies for discerning, filtering and using content, but also to guard against the risk of a bifurcated society. The prospect of self-referential echo chambers, where the AI-infused and the AI-allergic grow ever further apart, is a real one.

The message is unmistakable: trust is essential for AI’s seamless and valued integration into our world. Trust is built not by concealing but by informing. AI transparency may need to go beyond being a compliance requirement or a nice-to-have; it may become a foundation for consumer trust. For now, our survey respondents tell us there is no room for complacency.

For companies and creators, the path ahead raises clear questions for the here and now:

- What is your strategy for AI and its transparency to your customers, or wider stakeholders?

- How are you educating customers on the application and very real benefits of AI, and how are you preserving their interests in this fast-moving landscape?

- What risks are you exposed to, today or soon, in terms of where you are deploying AI technology or where competitors may be about to disrupt you?

- How will you build trust in this AI-driven content world?


Related Insights

Truth: people value what they know most - other people

Consumers prefer human-created content for its authenticity and personal touch, highlighting the risks for companies ignoring these values in the age of AI.


Quality: a price to be paid, or risk a race to the bottom

AI's role in content creation challenges traditional quality measures, with consumers valuing human touch but reluctant to pay more for it.


Control: protect and empower, the real frontier of our relationship with AI

Our global survey shows consumers now favour sophisticated oversight over restrictive control, reflecting a mature understanding of AI governance.
