Navigating AI governance for energy companies: why a holistic enterprise model is key to compliance

31 March 2025

As energy companies increasingly look to explore and adopt artificial intelligence (AI) as a major enabler of their strategy, they must navigate a rapidly evolving policy and compliance landscape. Regardless of the final regulatory outcomes, a minimum level of AI governance and control will be expected from UK energy organisations. Rapid, organic AI implementations with limited oversight will most likely lead to significant technical and policy debt, which may be complex and costly to address retrospectively and, in the worst case, could result in substantial fines.

In this article, we share Baringa’s recommendations for approaching the challenge and harnessing the power of AI, while ensuring compliance and mitigating risk.

Ofgem’s consultation on AI guidance in the energy sector

In 2023, the UK Government Department for Science, Innovation and Technology (DSIT) released the white paper ‘A pro-innovation approach to AI regulation’. The paper directs regulators to regulate AI within their sectors in keeping with a set of core principles for AI regulation. Ofgem, the energy regulator for Great Britain, launched a public consultation on its draft guidance on AI use in the energy sector in December 2024. The aim is to begin defining the regulatory framework for AI governance, so as to manage risks and enable the adoption of AI in the sector.

This guidance is relevant to all energy market participants, including energy suppliers, network companies, energy producers (including renewables businesses), trading companies and central industry bodies, as well as software and AI solution providers for the energy sector.

Guidance and regulation

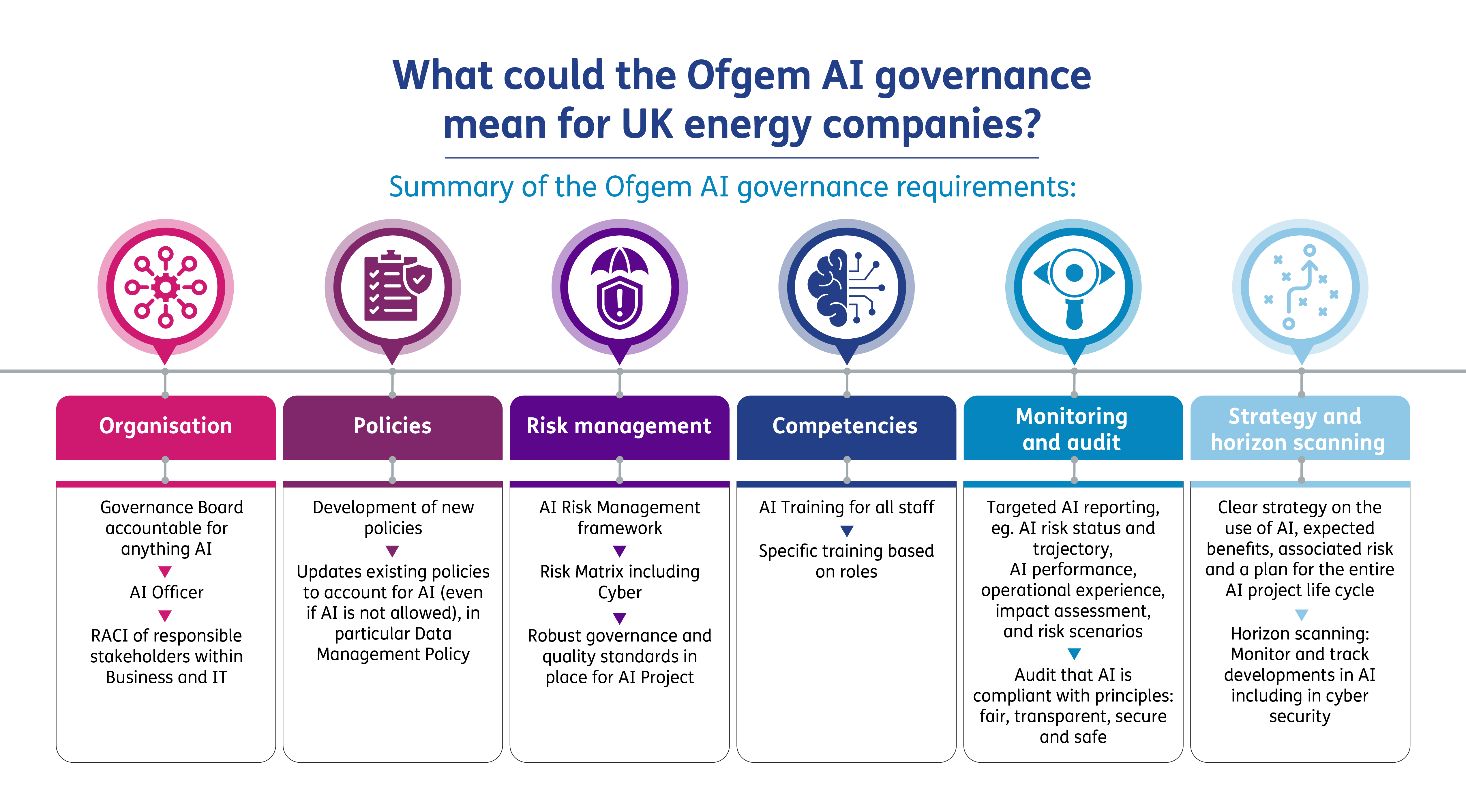

Ofgem believes the current energy regulatory framework is adequate to address AI-related harms, with the AI guidance serving as a supplementary roadmap. The guidance outlines proportionate good practice in AI governance, risk management and competencies, including where these overlap with other regulatory areas such as data protection and health and safety.

The final AI guidance for the energy sector is expected to be published in spring 2025. Because the guidance supplements rather than replaces existing rules, licensees and regulated energy organisations must continue to comply with the existing regulatory framework regardless of whether they use AI, by establishing and maintaining appropriate processes, systems and governance. Financial penalties for the misuse of AI, and for breaches of regulatory obligations arising from AI use, will be enforced under the existing energy regulatory framework.

Ofgem's guidance emphasises technology-agnostic good practice rather than best practice, focusing on high-risk uses and scenarios and aiming to balance AI opportunities with consumer protection. The guidance is expected to be reviewed and adapted as AI evolves and its risks are better understood. Energy companies will therefore need to assess the level of risk of each AI use case in order to know where and how to apply the guidance.

Different areas of the energy sector also face varying levels of risk. For example, nuclear generation and energy distribution carry high physical and environmental risks, energy retailers face data privacy risks, and trading companies deal with financial risks. How the guidance will be applied across these diverse risks is not yet clear. Our understanding is that this guidance is above all a placeholder, and that companies with ambitions to leverage AI early or at scale should understand and consider implementing best practice early in higher-risk areas, and ensure that good practice is adopted quickly in other areas.

We believe that Ofgem’s proposed “wait and see” approach before modifying the current energy regulatory framework is sensible and proportionate, given that Ofgem does not intend to directly enforce implementation of the guidance by energy companies, for example by assessing compliance and imposing preventative measures.

We expect most energy participants to follow the guidance, as it sets a minimum standard to include in their risk management governance, with leading practices seen as a long-term goal to strive for. For non-regulated and unlicensed energy businesses, such as technology companies, direct enforcement by Ofgem will be near impossible; however, supply-chain pressure from the licensed companies they serve should allow the guidance to be enforced indirectly.

In any case, this guidance should not be looked at in isolation from the wider regulatory context around AI.

Cross-sector view and wider international regulatory context

For many energy companies, AI is seen as a major risk but also a major opportunity to drive increased value over the coming years of the energy transition. Demonstrating safe and compliant exploration and deployment of AI to energy company boards and shareholders will require dedicated efforts to keep up with regulation, especially as the government is advocating for an accelerated roadmap to AI adoption across all sectors.

Since Ofgem launched its AI guidance consultation, the UK government has published a further document on the topic. The AI Opportunities Action Plan, often referred to as the Clifford Report, published on 13 January 2025, sets out a bold plan with specific short- and longer-term actions to fulfil the ambition for Britain “to lead the AI revolution”, such as reforming some existing legislation and regulatory regimes and collaborating with leading AI companies, academics and entrepreneurs. The government also recognises the current pro-innovation regulatory approach as a strength for AI adoption. However, the report suggests that if ambiguous regulatory guidance impedes progress, a central body could be established to promote and pilot AI innovations across sectors.

Internationally, the EU AI Act is a cross-sectoral regulation that applies to any company placing AI systems on the EU market or deploying them in the EU. Most of its provisions will apply from August 2026. Many energy companies that also operate within the EU will be in scope for the Act, which sets out specific obligations for organisations using certain high-risk AI systems. The Act is widely regarded as the “gold standard” for AI governance and risk management. Compliance is mandatory, with high fines for non-compliance: up to €35 million or 7% of global annual turnover, whichever is higher. Hence, for many companies, awareness of the EU AI Act and an understanding of its applicability are essential to developing their AI roadmap.

In contrast, the recent executive order on AI by President Trump in the US, which rescinded Biden’s executive order on AI safety, indicates a less stringent regime from the US federal government that may shift the global balance of AI power, with the US now pushing for much faster and more liberal use of AI. President Trump also recently announced private-sector investment of up to $500 billion in AI infrastructure, with the aim of making the US the front runner in developing business-critical technology.

Furthermore, the disruption caused by the launch of Chinese startup DeepSeek’s low-cost R1 AI model in January 2025, whose app became the most downloaded free app in the US within a week, is raising questions about the future of America's AI dominance.

Baringa’s view on the draft Ofgem guidance

The current approach proposed in the draft Ofgem guidance seems to lay a sturdy foundation for the introduction of AI in the energy sector. However, we think there are several points (outlined below) that would require further consideration to best allow energy companies to embrace the benefits of AI in a safe, fair, and sustainable way. Key challenges speak to the need for clear rules-based regulation – without this, the sector will struggle to interpret, and the regulator will struggle to enforce, the desired standards.

Regulatory interoperability. Many UK energy companies will be in scope for the EU AI Act, as they may have operations in the EU. To reduce the regulatory burden on energy companies, it would be helpful to have clear principles for interoperability, both for where the UK regulatory approach differs from the EU AI Act and for where Ofgem guidance intersects with other conduct regulations such as consumer duty, the acceptable use of AI for vulnerable customers, or data privacy law.

Specific guidance on AI use cases and risk categorisation of AI models. To support the principle of proportionality, providing examples of common industry use cases, including a categorisation of the various AI models and their context, would help energy firms understand exactly what is expected of them.

Lack of harmonised ethical standards in fairness and explainability in AI. In the current regulatory landscape, there is little actionable guidance on how to measure, mitigate, or report AI bias. Clear rules are needed to define fair outcomes and explainability. Without this, firms may create their own standards, leading to inconsistencies and potential exposure to scrutiny that could hinder innovation.

Insufficient guidance on testing and validation. Given AI’s dynamic nature, testing and validation are essential for building trust in AI. However, regulatory guidelines are unclear, leading to uncertainty and compliance risks for organisations using advanced AI. AI systems evolve through retraining, so a key question is how to validate and recertify continuously learning AI models and understand when updates require regulatory reassessment.

Customer outcomes and accountability. As AI systems increasingly make decisions affecting customers, the lack of clear regulations on accountability leaves firms vulnerable to reputational damage and regulatory action if something goes wrong, like a biased decision or an error from poor training data.

Considerations for environmental impact. Growth of AI and its infrastructure leads to increased use of electricity, water, unsustainably mined minerals, and electronic waste. It would be prudent to align emerging AI guidelines with wider sustainability and climate-related requirements.

The impact for UK energy companies

Whilst CIOs and transformation leaders in the energy sector are expected to prioritise, closely manage and deliver a wide range of AI-enabled transformations across their organisations, they will also need to closely monitor developments in AI governance to avoid the possibility of substantial fines or even the loss of their licences. Regardless of the final regulatory outcomes, a minimum level of governance and control over AI usage will be expected from UK energy companies.

With the current hype around AI, the temptation to apply AI at pace, organically and with limited controls across various departments is strong. We see AI working groups being kicked off and AI champions being nominated across organisations with the goal of identifying AI use cases and implementing AI-powered proofs of concept to modernise operations, HR, the contact centre and more. However, introducing AI in an organic or uncontrolled way is likely to lead to technical and control debt.

If British energy companies opt to follow only the basic guidelines from Ofgem, they should keep in mind that these guidelines are just the starting point. As the risks of AI become clearer, the rules are likely to get stricter. Furthermore, based on lessons learned from regulation and enforcement of data privacy and the Cyber Assessment Framework (CAF), for which audits are typically evidence-based and require time-bound improvement plans, we believe that implementing good AI practice “by design”, much like “cyber/security by design”, will be essential to demonstrate compliance.

As per the Ofgem guidance, energy companies will need to be able to demonstrate that they have established robust AI governance and risk management practices. This will need to be implemented across the enterprise with key impacts in IT, procurement, risk, HR, and legal.

Recommendations on implementing and accelerating AI in the energy sector

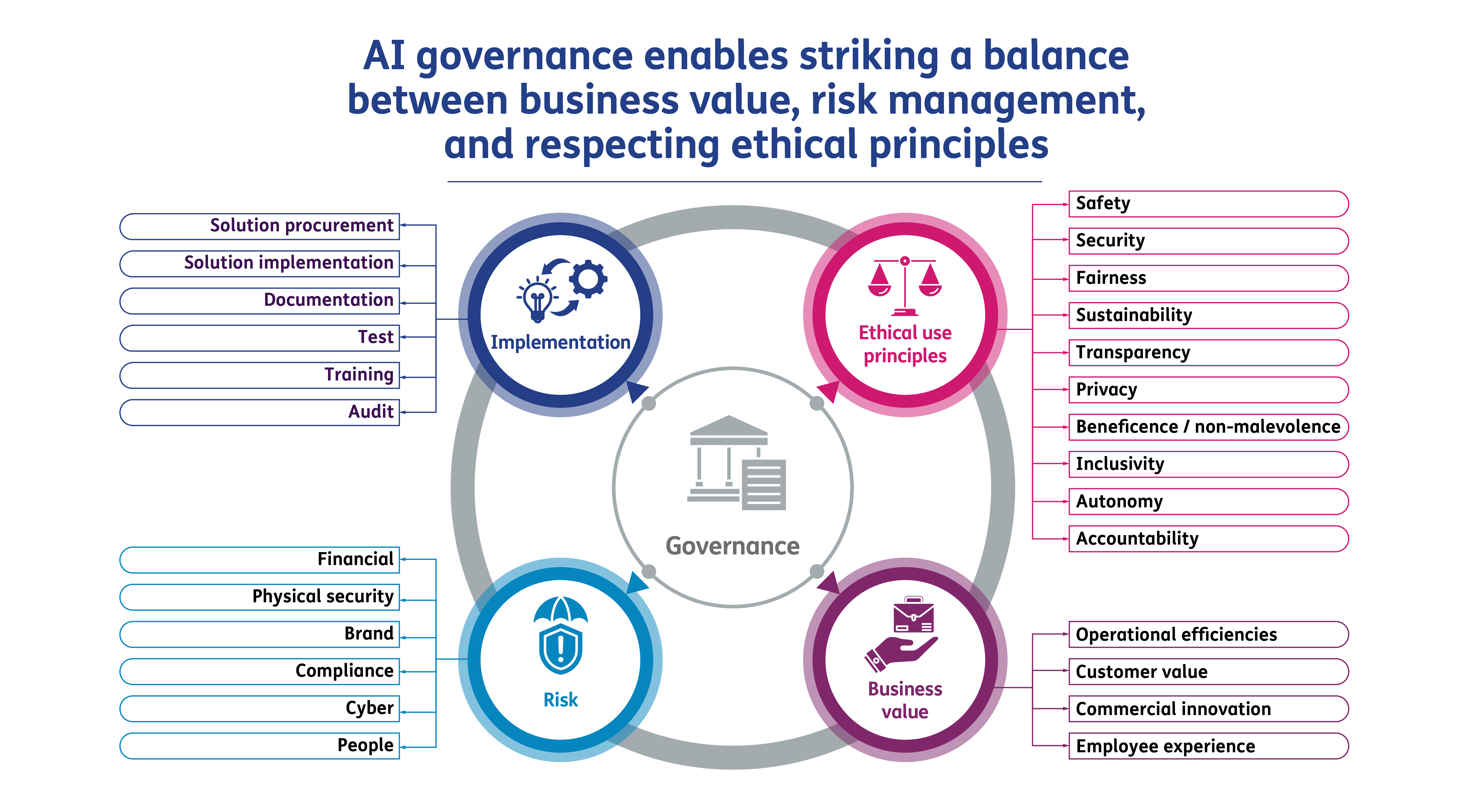

Energy companies aiming to deploy AI across their organisation will need to balance the following priorities:

- Prioritising investments that focus on delivering significant business value, such as competitive advantage, efficiencies, and improved customer experience.

- Delivering and deploying high-quality AI solutions in a controlled and timely manner, ensuring use cases, users, benefits, and risks are fully documented and ethical principles are respected.

- Managing the risks associated with the introduction of AI by implementing the right controls within the organisation and significantly upskilling control departments, so they can cover new metrics such as model bias, explainability, transparency, and AI decision autonomy.

- Ensuring compliance with current and future regulation by keeping up to date on policies and collaborating with Ofgem and other regulatory bodies to clarify and develop clear guidelines.

Whilst it might be tempting to focus immediate efforts on the use case identification and IT implementation aspects, it is advisable to set up central AI governance from the beginning to avoid the potential costs of a retrospective compliance project.

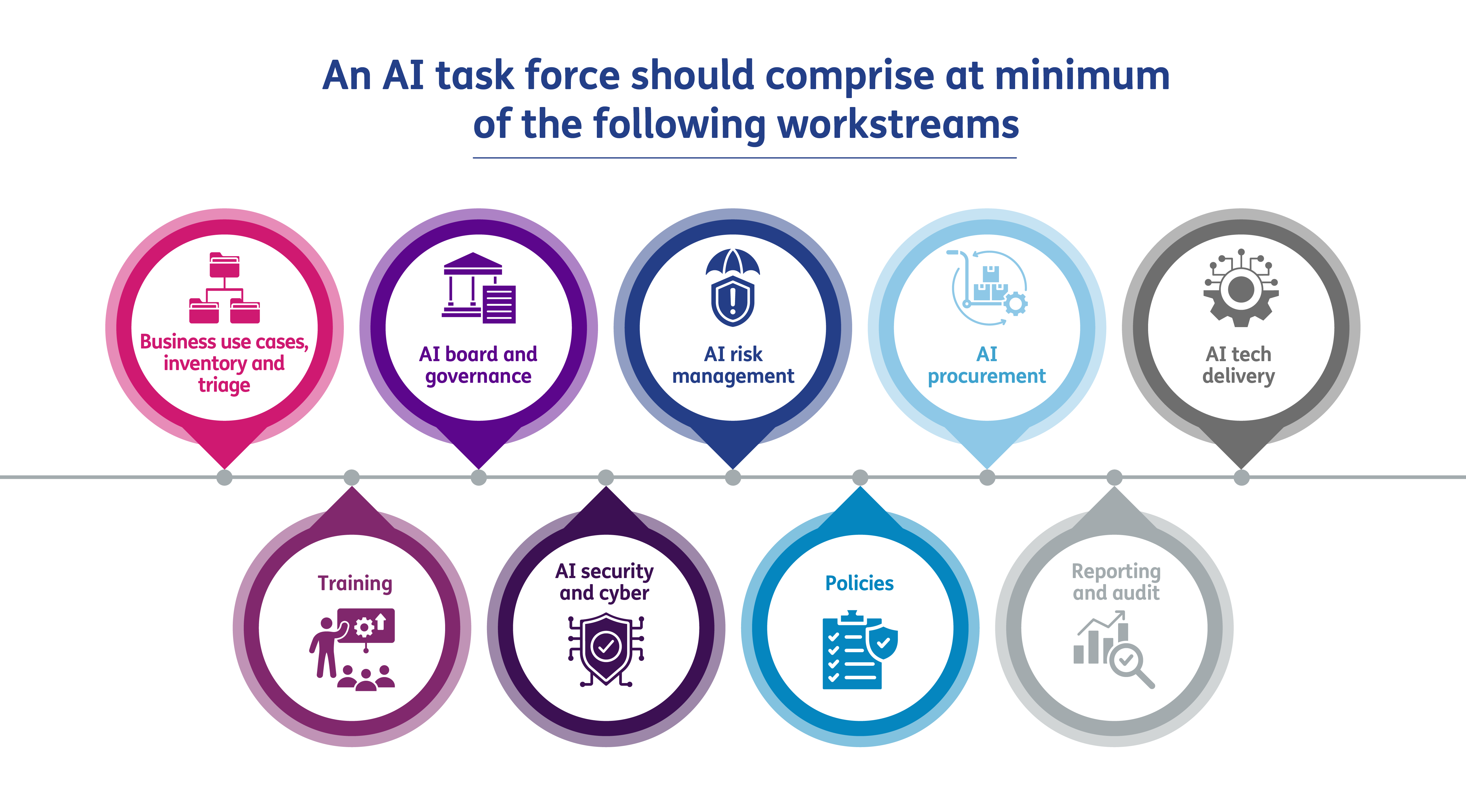

To deliver the various requirements of the Ofgem guidance, whilst focusing on business value and innovation, we recommend establishing a dedicated, project-style AI taskforce. This should be supported by representatives from each division of the business to coordinate the activities required across the enterprise (Business Change, IT Change, Training, Legal, Procurement, etc.) until these can all transition to ‘business as usual’ operations. We believe that a well-structured AI taskforce should comprise the following workstreams:

The AI taskforce should facilitate the accelerated identification and development of use cases. It will be responsible for delivering the AI governance, including the creation of a comprehensive inventory of use cases (live and backlog), detailing their benefits and associated risks. A triage process should be established to determine which AI solutions should be prioritised and implemented. To avoid a parallel process, we recommend that the triage of AI opportunities be integrated into the digital and IT triage and prioritisation process, and based on business value, cost, and risk assessment.
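To make the inventory and triage steps more concrete, here is a minimal Python sketch of a use-case register that ranks backlog items. It is purely illustrative and rests on our own assumptions rather than on Ofgem’s guidance: the `UseCase` fields, the 1 to 5 scores, the three-level `RiskLevel` and the simple value-minus-cost-minus-risk formula are all hypothetical, and a real triage would plug into the organisation’s existing digital and IT prioritisation process.

```python
from dataclasses import dataclass
from enum import IntEnum


class RiskLevel(IntEnum):
    """Hypothetical three-level risk rating (illustrative, not Ofgem's categories)."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class UseCase:
    """One entry in a hypothetical AI use-case inventory (live and backlog)."""
    name: str
    status: str          # "live" or "backlog"
    business_value: int  # illustrative 1-5 score
    cost: int            # illustrative 1-5 score; higher means more expensive
    risk: RiskLevel
    benefits: str = ""   # documented benefits
    risks: str = ""      # documented risks


def triage(inventory: list[UseCase]) -> list[tuple[str, int, bool]]:
    """Rank backlog use cases by an illustrative score: value - cost - risk.

    High-risk items are not discarded; they are flagged for additional review.
    """
    ranked = []
    for uc in inventory:
        if uc.status != "backlog":
            continue  # live use cases are monitored, not re-triaged here
        score = uc.business_value - uc.cost - int(uc.risk)
        needs_review = uc.risk == RiskLevel.HIGH
        ranked.append((uc.name, score, needs_review))
    # Highest score first; Python's sort is stable, so ties keep inventory order
    return sorted(ranked, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    inventory = [
        UseCase("Contact-centre call summaries", "backlog", 4, 2, RiskLevel.LOW),
        UseCase("Demand forecasting", "live", 5, 3, RiskLevel.MEDIUM),
        UseCase("Automated debt-collection decisions", "backlog", 5, 2, RiskLevel.HIGH),
        UseCase("CV screening", "backlog", 3, 2, RiskLevel.HIGH),
    ]
    for name, score, review in triage(inventory):
        print(f"{name}: score={score}, extra review={review}")
```

The design choice here is to flag rather than exclude high-risk use cases, reflecting the idea that governance should enable value creation while routing the riskiest items, such as decisions affecting vulnerable customers, to closer scrutiny.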

The selection of AI technology should be conducted in alignment with the procurement function to ensure that the chosen solutions meet expected criteria such as quality, reliability, transparency and sustainability. This means that the procurement framework and its criteria will need to evolve to better assess the AI-related aspects of the technologies and services offered by providers.

In addition, the AI taskforce should also coordinate AI training efforts throughout the organisation to ensure that staff can use AI effectively, appropriately, and ethically. It should also ensure that policies are updated or created as needed and that risk management, cybersecurity, and reporting and audit activities are adequately covered.

Finally, the Ofgem guidance requires the establishment of an AI Board and the appointment of an AI Officer. Depending on the company’s current set-up and to limit the need for yet another governance body within the organisation, it may be more efficient to instead extend the duties of the company's board, steering committee or the Data Management Board to serve as the AI oversight committee, provided that board members are sufficiently educated in AI and risk to be able to make informed decisions.

Summing up

If they implement AI without a holistic enterprise view and clear governance, energy companies face the danger of stifling agility and innovation, or of taking on unacceptable risk. While robust governance is essential, it should be designed to enable value creation. This means making sure AI initiatives are aligned with strategic objectives, deliver measurable business outcomes, and are evaluated against metrics such as efficiency, effectiveness, risk and ethical compliance.

At Baringa, we help energy companies establish the critical foundations to secure their digital enterprise, shaping strategy, developing capability, and ensuring security and resilience.

- We help you understand AI risks and design tailored strategies to manage them across the enterprise. This helps with unlocking funding, driving effective governance, and establishing appropriate security to underpin successful transformation.

- We design and implement capabilities and operating models that are integral to your business and evolve with it. We help you build future-fit skills and capabilities across areas including cyber-physical risk, third-party risk, data privacy risk and safe use of AI.

- We work with you to protect your critical business operations, assuring capabilities and regulatory compliance. We ensure that you are ready to respond to incidents, manage the event, and remediate rapidly afterwards, giving you – and key stakeholders – confidence in your resilience.

Ultimately, Baringa enables energy clients to focus on the bigger goal: achieving successful digital and AI transformation to drive innovation, growth and compliance. With our deep expertise in the energy sector and in digital and AI, we help you navigate this landscape, so that you invest only in what is necessary to build a world-class, right-sized capability.

Get in touch with Anne-Laure Mersier or Silas O'Dea to find out more.
